ML Kit is a collection of APIs for integrating machine learning into your own applications. It includes computer vision and natural language APIs, and it is designed for usability and ease of access, keeping most of the processing under the hood. It works with the CameraX API, which allows it to process both still and real-time images. We have already used it in one of our blogs, where we implemented the face detection API on the VIA VAB-950.

If you have not already done so, make sure Android Studio is installed on your PC.

Installing the ML Kit

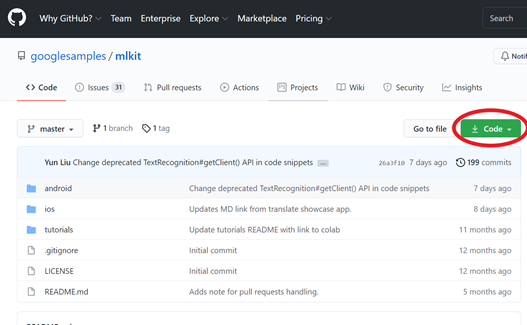

Go to the ML Kit GitHub repository and download the code onto your PC.

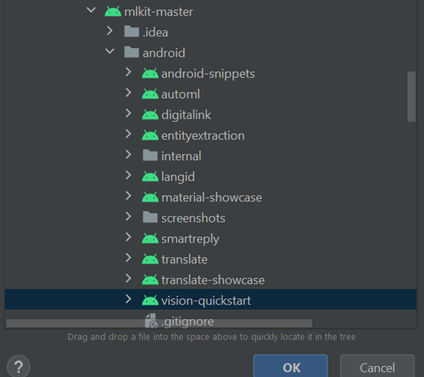

This repository includes all the code for iOS and Android, and within the Android section there are separate Kotlin and Java versions of the programs. We will be using Java exclusively for this tutorial and for further tutorials in the near future.

When the file has finished downloading, extract it to a location that is easy to find, such as your desktop or downloads folder.

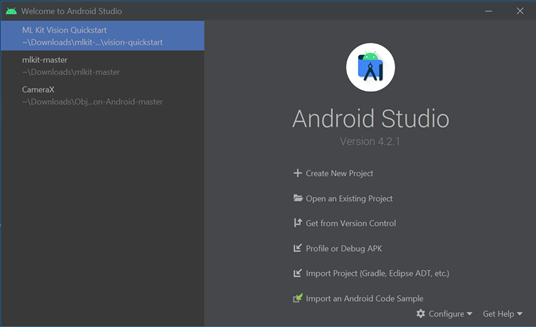

Next, open Android Studio and click on “Open an Existing Project”, then find the “mlkit-master” folder you extracted earlier. Within it, you should be able to find the “vision-quickstart” folder.

After opening the project, you may get the error: “‘:app:proguardRuntimeClasspath’. Could not create task ‘:app:minifyProguardWithR8’. Cannot query the value of this provider because it has no value available”. This is caused by the IDE using the wrong SDK Build-Tools version, which is easy to fix.

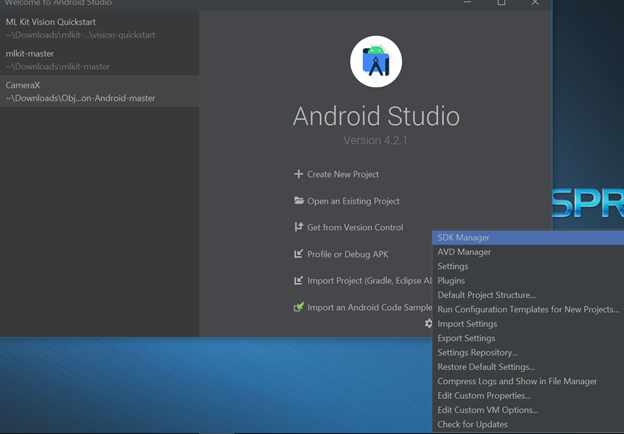

First, close the project to return to the Android Studio home screen by selecting “File” and then “Close Project”. This should take you back to this window:

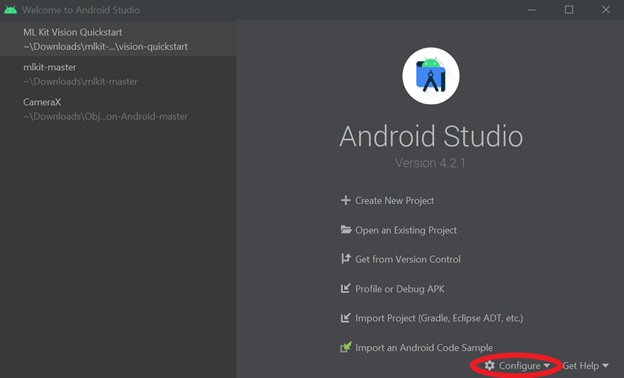

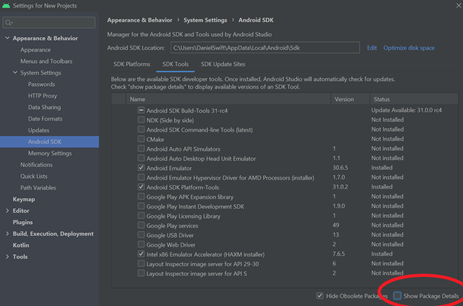

Then, click on the “Configure” button in the bottom right, which opens a drop-down menu. Select the first entry in this menu, labelled “SDK Manager”.

In the new window, go to the “SDK Tools” tab, and select the “Show Package Details” checkbox in the bottom right:

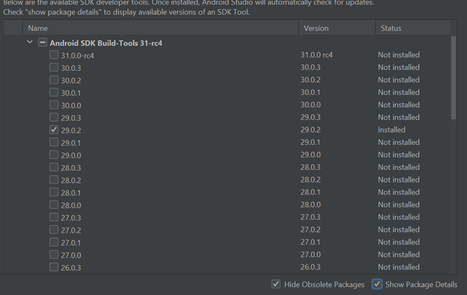

Under the first item on the list, named “Android SDK Build-Tools 31-rc4”, you should see a sublist of its available versions. Select version 29.0.2, and deselect whichever version is currently selected.

As a disclaimer, the SDK Build-Tools version may change in the future, as the sample code is still being updated by Google.

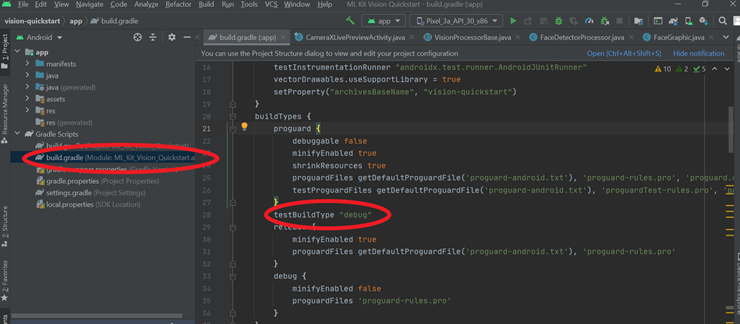

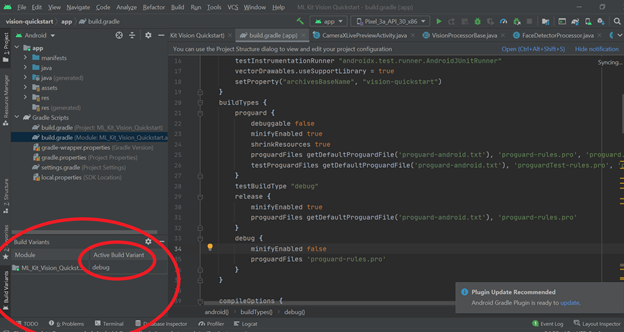

Now, if you reopen the project, it should build without any errors. However, there are still some changes to the Gradle files that we need to make before we can run the program on the VAB-950. Open the module-level build.gradle, which can be found under “Gradle Scripts”, and scroll to “buildTypes{…}”. You will see the line ‘testBuildType “proguard”’. Change “proguard” to “debug”.
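As a rough sketch, the edited part of the module-level build.gradle might look like the fragment below. Only the `testBuildType` line comes from the steps above. The surrounding `android` block and the comments standing in for the rest of the file are assumptions for illustration, and the exact layout may differ in the version of the sample you downloaded.

```groovy
android {
    // ... other settings left exactly as they are in the sample ...

    buildTypes {
        // ... existing build type blocks left unchanged ...

        // Before the change this line read: testBuildType "proguard"
        testBuildType "debug"
    }
}
```

After saving the file, Android Studio will usually prompt you to sync the project with the Gradle files. Accept the sync so that the change takes effect.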

That should be everything you need to do. The program should now run without problems on the VIA VAB-950. It is worth checking, however, that the setting in the “Build Variants” section of the IDE is set to “debug” as well. On the bottom left side of the IDE, you will see a tab labelled “Build Variants”. When you click on it, a small window opens with a two-column table, with the headers “Module” and “Active Build Variant”. Make sure the setting under the latter header is “debug” rather than “proguard”. If you need to change it, select the cell and choose the “debug” option from the drop-down menu.

That’s it. You should now have everything installed and ready to use when programming ML features onto the VIA VAB-950. The vision-quickstart app as a whole is an excellent implementation of the ML Kit and a great reference if you want to develop your own apps using one of the APIs. You can find more in-depth information about the kit on Google’s website, and if you face any further issues getting it to run in Android Studio, the GitHub issues page for the repository is a good place to look.