The new VIA SOM-9X35 is our latest platform for accelerating the development of edge AI devices. The module is powered by the MediaTek Genio 350 quad-core processor and supports Yocto 3.1 and Android 10. When paired with the VIA VAB-935 carrier board, it provides the performance and functionality required for creating intelligent vision applications for retail, commercial, medical and industrial environments.

To provide an example of the kinds of applications that are possible with the VIA SOM-9X35 module, this blog shows how you can use the platform to create an edge AI device for facemask detection.

Facemask Detection on Android

APIs and Libraries

The easiest way to start building an application that uses AI and computer vision is to take advantage of the many freely available APIs (Application Programming Interfaces). APIs allow developers to work with sophisticated, accurate neural network models without having to write a lot of complicated code from scratch. Many are maintained by large companies and are well documented, which makes them easy to learn and work with.

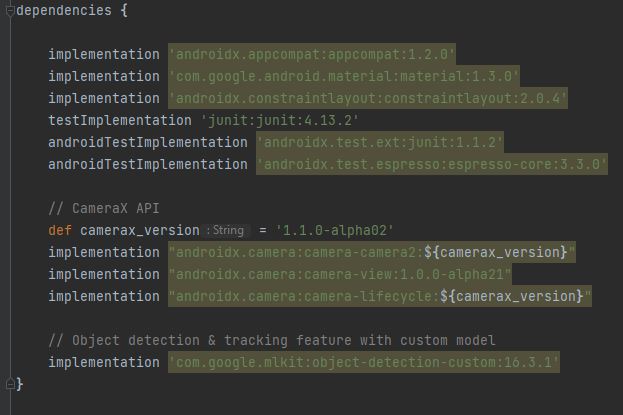

For instance, Google has developed two APIs that are very useful for facemask detection. The first, CameraX, makes it easier to build camera applications. This is essential for any computer vision application, and it allows us to work with the camera module bundled with the VIA SOM-9X35 Starter Kit. The second, Google’s ML Kit, provides a suite of APIs for different machine learning tasks, such as face detection and object recognition.

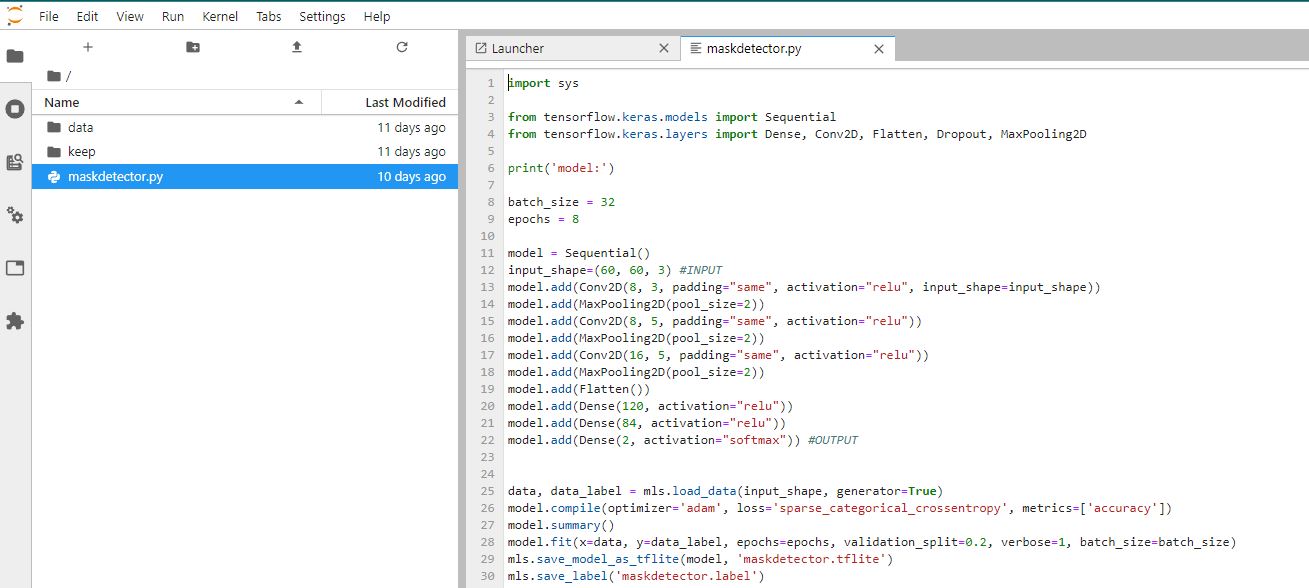

Finally, TensorFlow is used to build and train the models needed to detect whether a mask is being worn. This is a large library, and there is a lot to learn when working with it, but numerous tools make the process easier. Google Colab has plenty of tutorials on using TensorFlow and gives free access to Google’s GPU resources, so the processor-intensive task of training models does not have to run on your own machine. For training smaller models, VIA has produced a very useful tool, originally for the VIA Pixetto, called the Machine Learning Accelerator, which generates TensorFlow code for you.

Dataset Generation

For facemask detection, you will likely have to create your own dataset in order to build a custom model. Unfortunately, TensorFlow does not provide a ready-made dataset of people wearing and not wearing facemasks, but datasets created by other people can be found with a little digging online, for instance this dataset.

It is useful to collect images of many different people, covering a range of genders, hairstyles and ethnicities, taken from many different angles. This helps to prevent the model from overfitting and makes it more accurate when classifying a diverse range of people. In general, the more data you collect, the more accurate the model will be. However, be warned that a larger dataset takes longer to train on, and may call for a larger, more complex model.
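One common way to check whether a model is overfitting is to hold back part of the dataset for validation. The following is a minimal sketch in plain Java, assuming the images are already grouped by label. The class name `DatasetSplit`, the labels `mask` and `no_mask`, the file names and the 20% validation fraction are all hypothetical choices made for this illustration, not part of any VIA or TensorFlow tool.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Random;

/** Illustrative only: splits labelled image file names into training and validation sets. */
class DatasetSplit {

    /** Holds the two halves of a split. */
    static final class Split {
        final List<String> train = new ArrayList<>();
        final List<String> validation = new ArrayList<>();
    }

    /**
     * Shuffles each class separately and moves validationFraction of it into
     * the validation set, so both sets keep roughly the same class ratio.
     */
    static Split stratifiedSplit(Map<String, List<String>> filesByLabel,
                                 double validationFraction, long seed) {
        Split split = new Split();
        Random random = new Random(seed); // fixed seed keeps the split reproducible
        for (Map.Entry<String, List<String>> entry : filesByLabel.entrySet()) {
            List<String> shuffled = new ArrayList<>(entry.getValue());
            Collections.shuffle(shuffled, random);
            int validationCount = (int) Math.round(shuffled.size() * validationFraction);
            for (int i = 0; i < shuffled.size(); i++) {
                String item = entry.getKey() + "/" + shuffled.get(i);
                if (i < validationCount) {
                    split.validation.add(item);
                } else {
                    split.train.add(item);
                }
            }
        }
        return split;
    }

    public static void main(String[] args) {
        // Hypothetical file names; a real dataset would list files from disk.
        Map<String, List<String>> files = new LinkedHashMap<>();
        files.put("mask", List.of("a.jpg", "b.jpg", "c.jpg", "d.jpg", "e.jpg"));
        files.put("no_mask", List.of("f.jpg", "g.jpg", "h.jpg", "i.jpg", "j.jpg"));
        Split split = stratifiedSplit(files, 0.2, 42L);
        System.out.println("train=" + split.train.size()
                + " validation=" + split.validation.size());
    }
}
```

If accuracy on the training set keeps improving while accuracy on the validation set stalls or drops, the model is probably memorising the training images, and more varied data is likely to help.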

If you want to use the ML Kit custom object detection API, be sure to follow its specific guidelines when creating your own model, or the API won’t be able to work with it. Most models that you generate for these tasks should be TensorFlow Lite (.tflite) models.

Using Java to create a Facemask Detection App

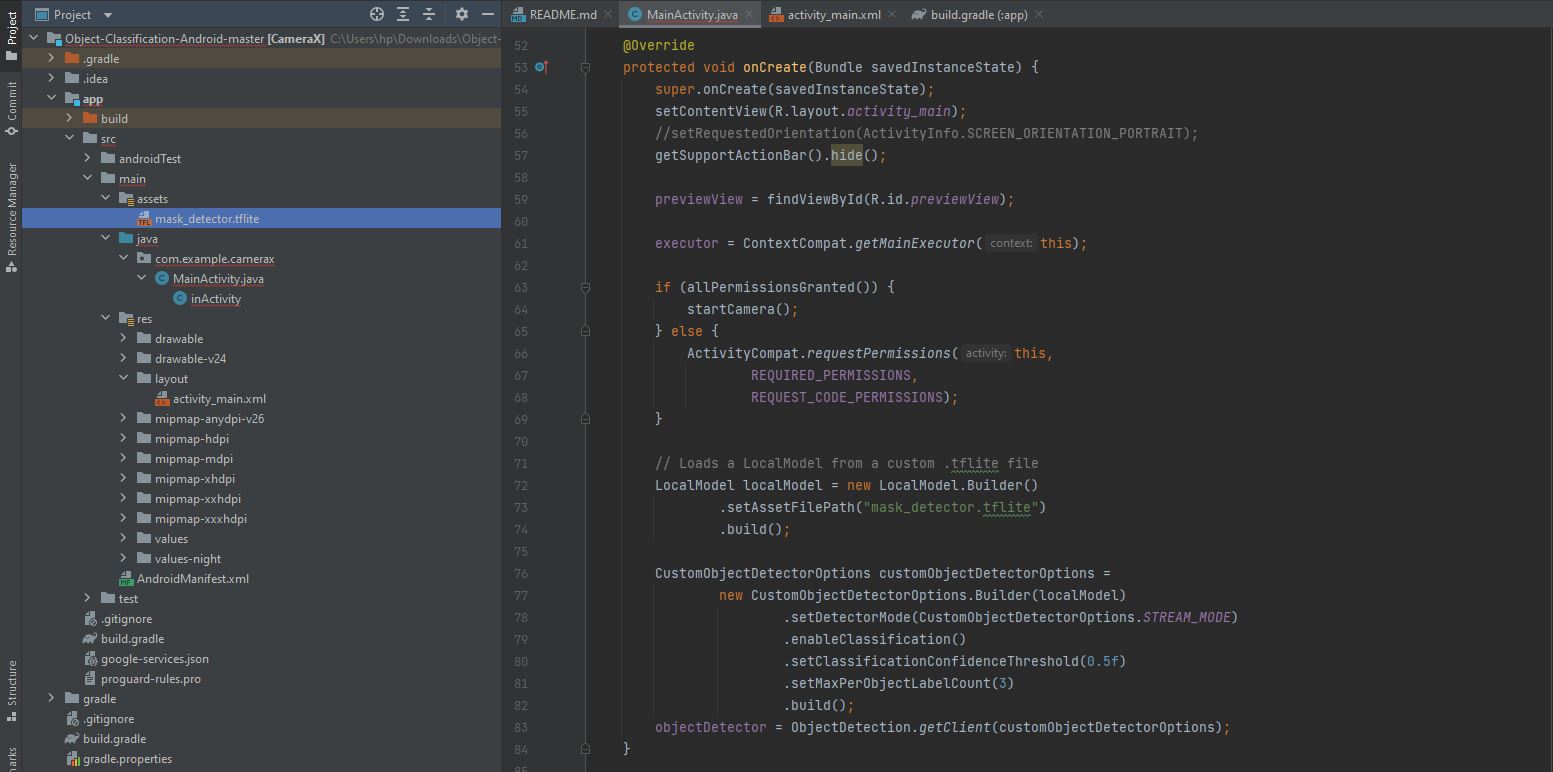

As with any project, there are a few ways to implement this application. In Android Studio, an easy way to get the camera working is to use the CameraX API and bind it to an image analyser that passes each frame to the Google ML Kit API for processing. Make sure to put the model in the “assets” folder of your Android Studio project. You will also need to add the dependencies for the APIs to the “build.gradle” file so that Android Studio recognises that you are using them.

A more detailed walkthrough on using CameraX and ML Kit to detect and track objects can be found in our previous blog here.

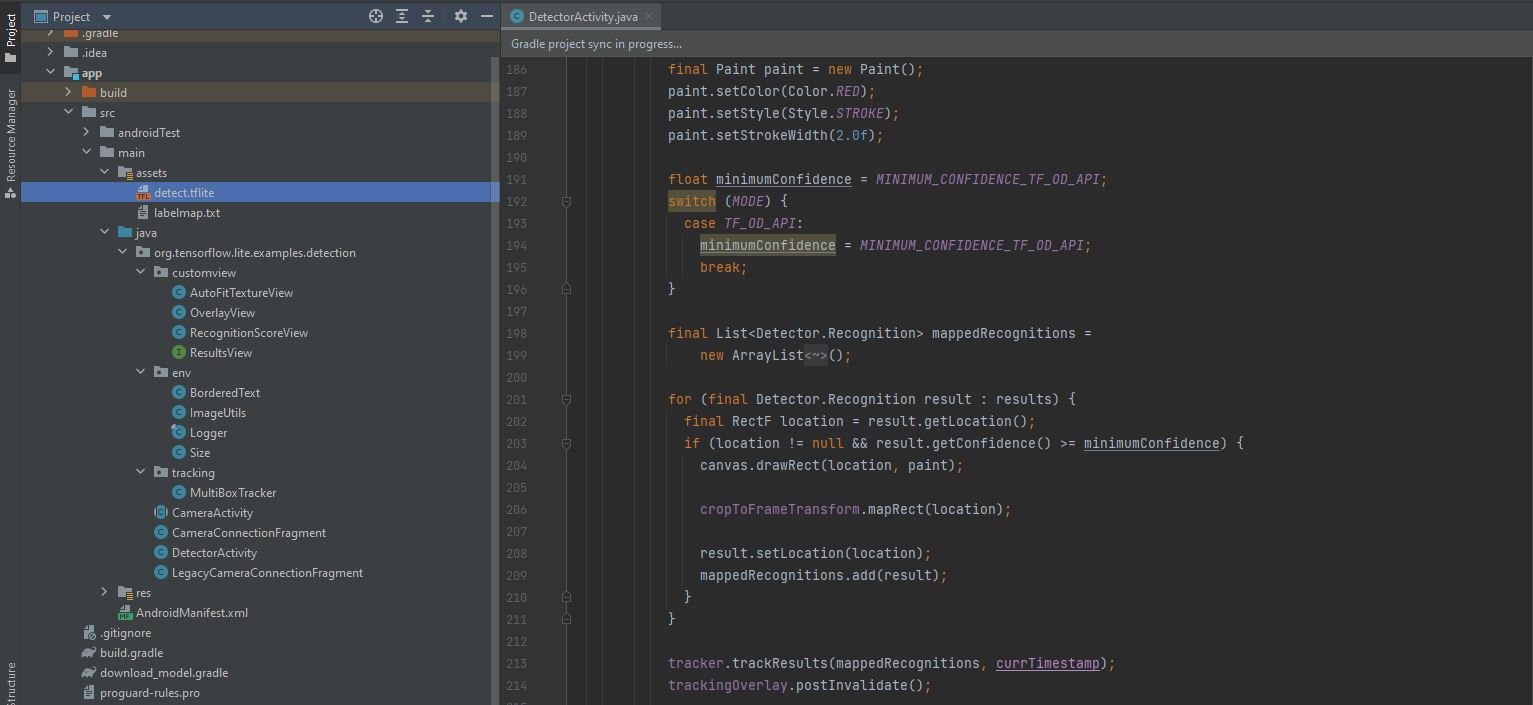

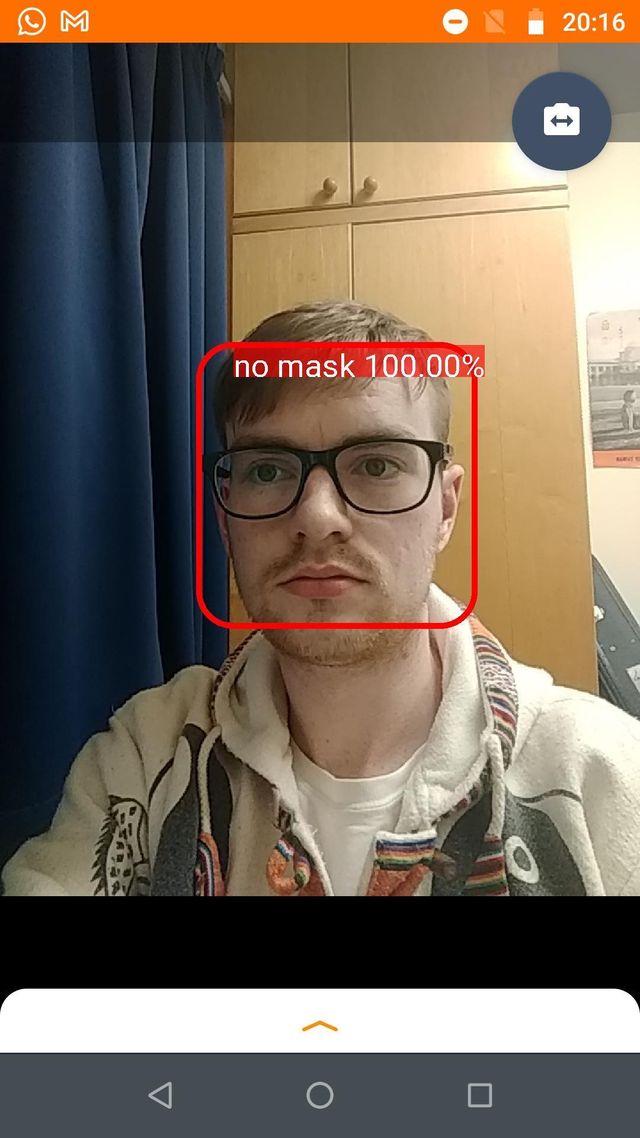

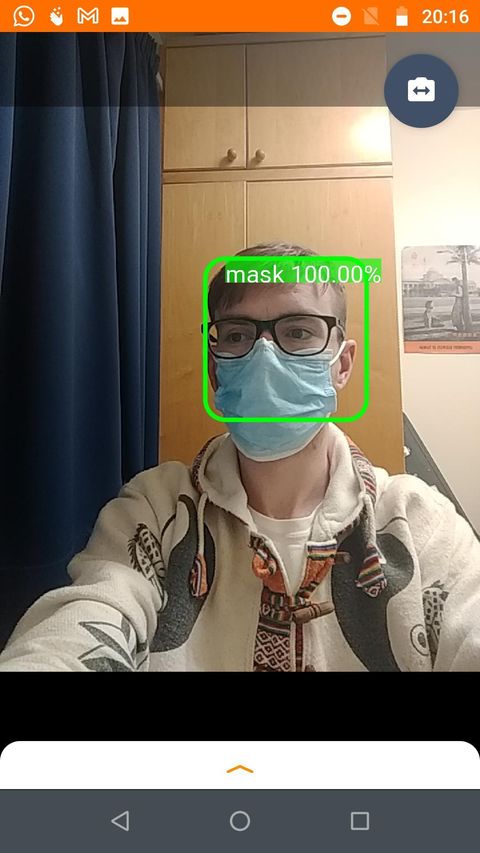

Creating the application this way is simplistic: the model would have just two classes, a person wearing a mask and a person not wearing a mask. A better approach is to first detect a face using ML Kit, and then use a TensorFlow model to determine whether the detected face is wearing a mask. TensorFlow provides a very useful demo application to help people learn how to use its API to detect objects, and this is a good base to build on for creating a more accurate facemask detection app.
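The two-stage approach can be sketched in plain Java, independent of Android. This is a minimal illustration under stated assumptions, not the code of the TensorFlow demo or of any finished app: `FaceFinder`, `MaskClassifier`, `FaceBox`, `Result` and the threshold parameter are hypothetical names introduced here, standing in for an ML Kit face detector and a TensorFlow Lite model, and the frame is simplified to a 2D array of pixel values.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Illustrative two-stage pipeline: find faces first, then classify each face crop. */
class MaskPipeline {

    /** Face bounding box in pixel coordinates; right and bottom are exclusive. */
    static final class FaceBox {
        final int left, top, right, bottom;
        FaceBox(int left, int top, int right, int bottom) {
            this.left = left; this.top = top; this.right = right; this.bottom = bottom;
        }
    }

    /** Stage 1 placeholder; a real app might back this with an ML Kit face detector. */
    interface FaceFinder {
        List<FaceBox> findFaces(int[][] frame);
    }

    /** Stage 2 placeholder; a real app might back this with a TensorFlow Lite model. */
    interface MaskClassifier {
        /** Returns the estimated probability, in [0, 1], that the face wears a mask. */
        float maskProbability(int[][] faceCrop);
    }

    /** Label and confidence for one detected face. */
    static final class Result {
        final FaceBox face; final String label; final float confidence;
        Result(FaceBox face, String label, float confidence) {
            this.face = face; this.label = label; this.confidence = confidence;
        }
    }

    /** Copies the pixels inside the box, clamped to the frame; null if nothing is left. */
    static int[][] crop(int[][] frame, FaceBox box) {
        int height = frame.length;
        int width = height == 0 ? 0 : frame[0].length;
        int top = Math.max(0, box.top), bottom = Math.min(height, box.bottom);
        int left = Math.max(0, box.left), right = Math.min(width, box.right);
        if (top >= bottom || left >= right) return null;
        int[][] out = new int[bottom - top][];
        for (int y = top; y < bottom; y++) {
            out[y - top] = Arrays.copyOfRange(frame[y], left, right);
        }
        return out;
    }

    /** Runs both stages on one frame and labels every face that lies inside it. */
    static List<Result> analyse(int[][] frame, FaceFinder finder,
                                MaskClassifier classifier, float threshold) {
        List<Result> results = new ArrayList<>();
        for (FaceBox box : finder.findFaces(frame)) {
            int[][] face = crop(frame, box);
            if (face == null) continue; // box fell entirely outside the frame
            float p = classifier.maskProbability(face);
            boolean masked = p >= threshold;
            results.add(new Result(box, masked ? "mask" : "no_mask", masked ? p : 1f - p));
        }
        return results;
    }

    public static void main(String[] args) {
        int[][] frame = new int[48][64]; // dummy 64x48 frame
        FaceFinder stubFinder = f -> List.of(new FaceBox(10, 8, 30, 32));
        MaskClassifier stubClassifier = c -> 0.9f; // pretend the model is 90% sure
        for (Result r : analyse(frame, stubFinder, stubClassifier, 0.5f)) {
            System.out.println(r.label + " " + r.confidence);
        }
    }
}
```

Keeping the two stages behind separate interfaces means the mask classifier only ever sees a cropped face, which is the property that makes this approach more accurate than classifying the whole frame, and either stage can be swapped without touching the other.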

A good example of a working application created using this technique can be found here, along with a more detailed look at the code.

A Complete Platform

The VIA SOM-9X35 module combined with the VIA VAB-935 carrier board provides a complete platform that facilitates the development of intelligent vision applications for edge AI devices. For more information, please visit the webpage via the link here.